What is Regression Testing? Everything You Need to Know

Software regression testing is the process of testing your software features and functions to ensure that recently introduced code changes have not inadvertently broken or degraded existing, working application features.

For example, if an ecommerce website's product team decides to implement a new 'click and collect' feature for its customers, regression testing will quickly tell the delivery team whether any existing and closely related areas of functionality such as 'order confirmation screens/emails' or 'ship to address' have been unintentionally broken in the process of adding this new 'click and collect' feature.

In simplest terms, 'software regression' is the concept of overall application quality getting worse, or regressing, when the product owner's aspiration is clearly to continually improve and enhance the user's experience. For as long as a delivery team continues to make changes to the application, regression testing will be necessary.

All too often, the excitement of adding new platform features for customers, combined with the speed-to-production required to stay one step ahead of the competition, creates a high risk of software quality regression if the company does not have a robust and consistently applied approach to holistic regression testing.

Regression testing is not always just a functional type of testing either. Let's consider security, performance and visual regression testing. If an individual page's average load time has increased by 33%, from 1.5 seconds to 2 seconds, after some recent changes, a company will likely consider that a performance regression. If the introduction of a new JavaScript library results in a number of new code vulnerabilities that didn't exist previously, this would rightly be considered a security regression. In a mobile-first responsive world, if the mobile UI layout has been unintentionally altered by intended changes to the desktop view of the application, and is now out of step with the mobile design, this could be considered a visual regression.

In each of the above examples, the application functionality remains completely stable and works as expected. However, other aspects of software quality, namely performance, security or visual appearance, can be said to have regressed. A company with a mature testing practice should account for these different types of testing in its regression testing strategy.
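The performance example above comes down to simple arithmetic, and a minimal sketch of such a check might look like the following. The function names and the 10% tolerance are illustrative assumptions, not a recommended threshold; each team would agree its own.

```python
def percent_increase(baseline_s: float, current_s: float) -> float:
    """Return how much slower current_s is than baseline_s, as a percentage."""
    return (current_s - baseline_s) / baseline_s * 100


def is_performance_regression(
    baseline_s: float, current_s: float, tolerance_pct: float = 10.0
) -> bool:
    """Flag a regression when the slowdown exceeds the agreed tolerance.

    tolerance_pct is a hypothetical, team-defined threshold.
    """
    return percent_increase(baseline_s, current_s) > tolerance_pct


if __name__ == "__main__":
    # The article's example: average load time moves from 1.5s to 2s.
    increase = percent_increase(1.5, 2.0)
    print(f"Load time increased by {increase:.1f}%")
    print("Regression:", is_performance_regression(1.5, 2.0))
```

A check like this only becomes useful once the baseline is recorded from a known good build, so that every later build is compared against the same reference point.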

What is the difference between regression testing and retesting?

Retesting is the process of testing a feature or function again because the actual result of a test execution did not match the expected result.

When a tester executes any kind of test and it 'fails', they will immediately 'retest' it to ensure that the failure is reproducible. A process of diagnosis then begins, in which testers need to determine the reason for the failure. Maybe the test environment is not performant enough, the data is invalid, or there is a legitimate bug to be raised with the developers.

The next 'retest' will occur when the reason for the failure has been addressed by the developers. If an accepted bug has been fixed, checked in and the test environment has been rebuilt, the tester can now 'retest' that feature and close the bug.

At this stage, the test has passed for the first time. In future cycles of testing, that feature will be 'regression' tested to ensure that it continues to pass and does not break again when new changes are introduced.

Why is regression testing important?

Frequent and consistent regression testing enables delivery teams to understand rapidly if they have unintentionally introduced breaking changes to the application.

With good requirements, software design, unit tests, code reviews and regression tests, organisations stand the best chance of continually delivering a high quality product with regular feature enhancements to their customers.

The cost of resolving a bug rises sharply the closer to production it is found. Regression testing helps you find these bugs at the earliest possible point.

Very few companies in the technology space enjoy minimal competition. With attractively priced SaaS products becoming more and more prevalent for desktop and mobile devices, dissatisfied customers can quickly and easily switch to a competing product overnight. There is plenty of competition at the product feature, product quality and product price levels.

A solid regression testing strategy assists companies in remaining competitive.

When should you do your regression testing?

The extent to which a testing team needs to undertake regression testing is driven directly by how frequently the developers commit code changes and deploy them to a test environment. A new build of code might only deliver half of a new feature, but it also brings an opportunity to immediately regression test other areas of the application.

If a company subscribes to an agile software delivery way of working, the best time to regression test is with every deployment of code to an environment. By failing to regularly regression test, a delivery team is simply adding to its technical debt further down the line. It is well understood that the cost of fixing a bug rises significantly as time progresses and the nearer a feature is to production release.

Regression testing early and frequently with consistent test coverage means that a delivery team can find and fix regression bugs at the earliest opportunity whilst the cost is still relatively low.

For the avoidance of doubt, let's state that once again. Regression testing should be undertaken with any new deployment of code to a test environment. If code is deployed 5 times on a given day, a suite of regression tests should also be executed 5 times. In a more waterfall-like model of software delivery, maybe code is only deployed once every few weeks. In this situation, there would be a correspondingly less frequent need for regression testing.

Now that we have a clear view of when we should execute our regression testing, we should consider the practical aspects of how these tests are run.

What's the difference between manual and automated regression testing?

Any type of regression testing, whether functional, performance or visual, can be undertaken on a manual or automated basis. Manual regression testing is undertaken by a team of human testers without any automated tooling. They will typically run through each test in the regression test suite until they have a successful result and any bugs that they raise along the way have been fixed and retested.

Manual regression testing will take significantly longer than automated testing for the same number of tests. It is also a more expensive undertaking given the need for human effort to complete it. A final challenge with manual regression testing is that it is not always consistent and repeatable.

What do we mean by repeatable? Well, in the event that a cycle of manual regression testing is 75% complete, having taken 4 days and 3 testers, and a bug is then found, it is very likely that when the bug is fixed and a new build is deployed to the test environment, just the bug fix itself and the remaining 25% of the regression tests will be executed before the entire regression testing cycle is deemed complete.

The problem with this is that we have just decided that good practice is to execute our regression tests with every new build of code deployed to an environment. However, a product team that needs to get that release out to its customers will simply not endorse re-running 4 days and 3 testers' worth of manual effort. There is then a stand-off between potential product quality and the need to release now.

When a team of manual testers is consumed in a cycle of regression testing for a planned release, the tendency is almost always to focus purely on the scheduled regression tests. Whilst this sounds an obvious and logical statement to make, we frame it as a challenge because there is little time or intent to test the application on a more heuristic and exploratory basis.

The biggest return-on-investment in any type of manual testing comes not from humans running repetitive regression tests, but from humans exploring the application as a user would and finding edge case bugs, which may well prove to be more critical to address.

Manual regression testing cycles can rapidly become a bottleneck to frequent feature delivery and suppress any meaningful and high return exploratory testing. This is why a high percentage of automated regression testing is deemed a must-have for an agile delivery team that needs to release quality features to a demanding time-critical schedule. It will help ensure regression test frequency, test consistency and enable more manual exploratory testing given that testers do not then need to worry about the 'scripted' tests. They can go explore.

Agile companies with a very mature testing practice will typically automate 70-80% of their regression tests. In agile delivery, automated tests should be written at the earliest opportunity, ideally in the same sprint in which the development team delivers the functionality. This ensures that an automated test for a new feature exists and can be re-run against all subsequent code deployments to an environment. We call this continuous automated testing and it is a critical part of a continuous integration/continuous deployment delivery model.

Different businesses will have differing approaches to the creation of these automated tests. Some will incorporate it as part of the development team effort whilst others will build a team of technical testers who can write automated test code and test with an independent perspective on quality. We would suggest that the latter is always the best approach.

We have seen that automated tests can be of differing types - functional, performance, visual, security. They can also assert both positive and negative test conditions, and they can extend 'test coverage' quickly and easily by repeating the same tests with differing data sets for products, customers and so on. Achieving this level of repetition is an unrealistic expectation of a manual testing approach.
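To illustrate positive and negative conditions repeated across differing data sets, here is a minimal data-driven sketch using Python's standard `unittest` module. The `order_total` function and the data values are hypothetical stand-ins for real application code and real test data.

```python
import unittest


def order_total(unit_price: float, quantity: int) -> float:
    """Hypothetical function under test, standing in for real application code."""
    if quantity < 1:
        raise ValueError("quantity must be at least 1")
    return round(unit_price * quantity, 2)


class OrderTotalRegressionTest(unittest.TestCase):
    # Each tuple is one data set: (unit_price, quantity, expected_total).
    POSITIVE_CASES = [
        (9.99, 1, 9.99),
        (9.99, 3, 29.97),
        (0.50, 10, 5.00),
    ]
    # Quantities that the application is expected to reject.
    NEGATIVE_CASES = [0, -1]

    def test_valid_orders(self):
        for unit_price, quantity, expected in self.POSITIVE_CASES:
            # subTest reports each data set separately if it fails.
            with self.subTest(unit_price=unit_price, quantity=quantity):
                self.assertEqual(order_total(unit_price, quantity), expected)

    def test_invalid_quantities_are_rejected(self):
        for quantity in self.NEGATIVE_CASES:
            with self.subTest(quantity=quantity):
                with self.assertRaises(ValueError):
                    order_total(9.99, quantity)


def run_suite() -> unittest.TestResult:
    """Load and run the test case, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderTotalRegressionTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_suite()
```

Extending coverage is then a matter of adding a row to one of the case lists rather than writing a new test.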

How to plan and prioritise your regression testing

The approaches below consider the testing of the full application stack through the user interface, where automated tests can be a little slower, the impact of poor environment performance can be higher and tests can sometimes be a little brittle. They assume that all automated unit tests and service layer tests have already been run in their entirety.

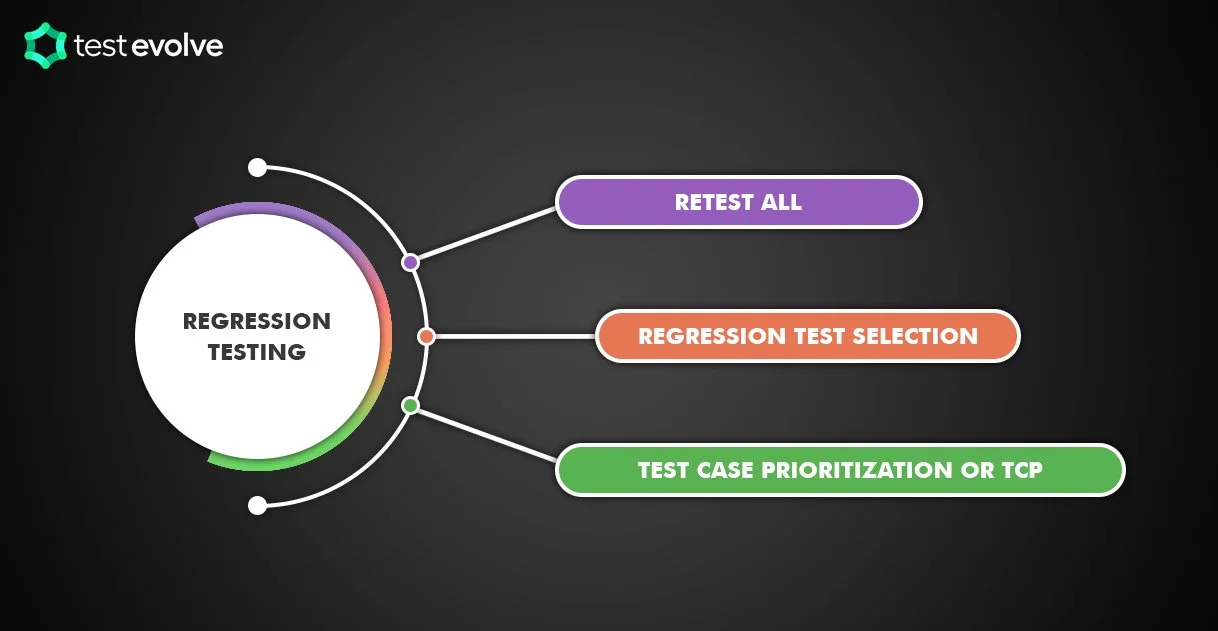

Whilst it is true that with an automated approach to regression testing, you can quickly and easily run an entire suite of regression tests, it is still possible to take a more targeted and efficient approach to test selection and execution. Most test teams with an automated regression testing suite in place will consider a combination of the below methods.

Generally speaking, as you move through the options, the risk increases marginally but the feedback arrives faster.

Run all regression tests

If your entire suite of tests runs in a relatively short time frame, say 20-30 minutes, this might be absolutely fine. But the reality is that with a team of dedicated automation engineers and a sprint-by-sprint increase in test coverage, your full cycle run time might rapidly grow to a number of hours after only a few sprints. At this stage it risks no longer serving its purpose of providing the fastest possible quality feedback. However, you will benefit from full test coverage on every build.

Run a critical set of regression tests

When test volume ramps up quickly, a common next step is to segment your entire regression test suite into 2 categories - critical and non-critical tests.

All testing, whether manual or automated, should have a degree of risk-based prioritisation to it in order to detect bugs as soon as possible. Areas of application functionality can be given a risk score of 1-9. That score is determined by multiplying a probability score (1-3, low-med-high) by an impact score (1-3, low-med-high). A feature that is more likely to contain bugs because of complexity or related change (probability - 3) and is highly problematic to end users if a bug makes it to production (impact - 3) should be your highest priority for test selection.
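As a minimal sketch of the scoring described above, the snippet below multiplies probability by impact and sorts features by the result. The feature names and their ratings are illustrative assumptions, not real assessments.

```python
from dataclasses import dataclass

# Map the low-med-high ratings onto the 1-3 scale.
LEVELS = {"low": 1, "med": 2, "high": 3}


@dataclass(frozen=True)
class FeatureRisk:
    name: str
    probability: str  # likelihood of bugs: "low", "med" or "high"
    impact: str       # harm to end users if a bug ships: "low", "med" or "high"

    @property
    def score(self) -> int:
        """Risk score from 1 to 9: probability multiplied by impact."""
        return LEVELS[self.probability] * LEVELS[self.impact]


# Hypothetical features and ratings, for illustration only.
features = [
    FeatureRisk("footer links", "low", "low"),
    FeatureRisk("click and collect", "high", "high"),
    FeatureRisk("order confirmation email", "med", "high"),
]

# Highest risk first, so the riskiest areas are tested first.
prioritised = sorted(features, key=lambda f: f.score, reverse=True)

if __name__ == "__main__":
    for feature in prioritised:
        print(f"{feature.score}  {feature.name}")
```

Note that the multiplication only ever yields 1, 2, 3, 4, 6 or 9, so it is worth agreeing in advance which scores count as critical.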

Test teams often then run just the critical tests on any build of code to the test environment and maybe run all of the tests on a frequency schedule - daily, every other day, weekly etc. A critical set of tests can also be set to include areas of application functionality that have regressed previously and as such have now been elevated to critical status for continued testing with future builds.

The reduced critical pack gives you quick feedback on your critical features, and you have peace of mind knowing that all of your tests are still being run, albeit at a reduced frequency. This is a great middle-of-the-road, risk-based approach.
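The split between a per-build critical pack and a scheduled full run could be sketched as follows. The test names, the `critical` flag and the trigger names `"build"` and `"scheduled"` are all assumptions made for illustration; most test frameworks offer their own tagging mechanism for the same purpose.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RegressionTest:
    name: str
    critical: bool


# Hypothetical suite: the ship-to-address test has been promoted to
# critical because that area has regressed previously.
SUITE = [
    RegressionTest("checkout_happy_path", critical=True),
    RegressionTest("ship_to_address", critical=True),
    RegressionTest("newsletter_signup", critical=False),
    RegressionTest("footer_links", critical=False),
]


def select_tests(suite: List[RegressionTest], trigger: str) -> List[RegressionTest]:
    """Return the tests to run for a given trigger.

    "build":     every deployment runs only the critical pack.
    "scheduled": the periodic run (daily, weekly, ...) executes everything.
    """
    if trigger == "build":
        return [test for test in suite if test.critical]
    if trigger == "scheduled":
        return list(suite)
    raise ValueError(f"unknown trigger: {trigger!r}")


if __name__ == "__main__":
    for trigger in ("build", "scheduled"):
        names = [test.name for test in select_tests(SUITE, trigger)]
        print(f"{trigger}: {names}")
```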

Run a change related set of regression tests

In essence, this is a more extreme version of the previous option. If you know that the incoming code changes are confined to a certain application feature and completely detached from everything else, you might choose to execute, for each build, only those regression tests that you know to be directly related to the area of change. Then on a scheduled basis, you might run your critical pack and, on a less frequent basis, all of your tests. The primary risk here is that, as a tester, you must be very technically aware of the incoming change and confident in your ability to select tests that will provide sufficient feedback on application quality. The saving in time to run the regression tests might not justify the increased risk of not finding a regression bug until the next day.
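One way to picture change-related selection is to label each test with the application areas it exercises and pick the tests that overlap with the areas touched by a build. This is only a sketch: the test names and area labels are hypothetical, and the selection is only as good as the accuracy of that mapping.

```python
from typing import Dict, List, Set

# Hypothetical mapping of each regression test to the areas it exercises.
TEST_AREAS: Dict[str, Set[str]] = {
    "test_checkout_total": {"checkout"},
    "test_click_and_collect_slot": {"checkout", "delivery"},
    "test_ship_to_address": {"delivery"},
    "test_login": {"account"},
}


def select_change_related(
    test_areas: Dict[str, Set[str]], changed_areas: Set[str]
) -> List[str]:
    """Return the names of tests that touch at least one changed area."""
    return sorted(
        name for name, areas in test_areas.items() if areas & changed_areas
    )


if __name__ == "__main__":
    # A build that only changes delivery-related code.
    print(select_change_related(TEST_AREAS, {"delivery"}))
```

A test that is missing an area label will be silently skipped by this kind of selection, which is exactly the risk described above and the reason the scheduled critical and full runs remain necessary.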

The approach that is right for your testing team will be different to others and will very much depend upon the frequency and type of change, coupled with an awareness of previous application quality issues and your product team’s desire to release to production as soon as possible.

Conclusion

It’s not hard to see that effective and timely regression testing is critical to the ongoing delivery of a competitive product for many businesses. For the majority of delivery teams across most industry verticals, aspirations of agile software delivery with fast, frequent and high quality shippable builds are now the norm. Slow manual regression testing and late bug detection will hinder timely delivery, and bugs that make it to production damage your product and brand reputation in an ever more competitive market with plenty of alternatives for customers to choose from.

Complete test automation solutions like Test Evolve can help you to dramatically increase your automated regression testing coverage in a short space of time. Our Halo real time results dashboards help you to share clear views of application quality in the moment, with full traceability to your requirements and transparency of your test coverage. Why not sign up for a free trial now?