A Stable Test Environment: Key to Agile Development Efficiency

If you’ve ever been part of an agile development team, you know how fast things can move. It’s a constant race against time to deliver new features, fix bugs, and keep everything running smoothly. But those agile aspirations can come crashing down if your test environment isn’t stable.

Imagine trying to build a house on quicksand—a disaster waiting to happen.

A stable test environment is the bedrock of successful agile development. It makes your tests more reliable, ensures your feedback is accurate, and allows your team to focus on what they do best: creating feature-rich software.

Without it, you’re swamped with false positives, wasted time, and a whole lot of frustration. Let’s dive into why a rock-solid test environment is not just important but absolutely essential for any agile team.

Understanding Test Environments

A test environment is a comprehensive setup of both software and hardware where the testing team evaluates the functionality of a new application. This environment is designed to closely mimic the real-world conditions under which the application will operate once it is released. It includes all the necessary components for thorough testing: hardware, software, network configurations, and the application itself.

The primary goal is to create a controlled setting where testers and developers can identify and resolve bugs in the software before it goes live.

Components of a Test Environment

The hardware component includes servers, computers, mobile devices, and any other physical components required to run the application. Software encompasses operating systems, databases, and other software dependencies that the application relies on. Network configurations, including firewalls, routers, and switches, should replicate those of the production environment. Finally, the application itself, including all its modules and features, is a crucial part of the test environment.

Importance of a Stable Test Automation Environment

A stable test automation environment is a well-maintained and consistent setup specifically for automated testing. It encompasses all the necessary hardware, software, tools, and resources required to run automated tests efficiently. This stability is crucial for both functional and non-functional testing, ensuring that automated tests are executed consistently and produce accurate results.

Each test run should yield reliable and reproducible outcomes, minimizing the risk of false positives and false negatives. This reliability saves time and effort for the testing team, allowing them to focus on more critical tasks.

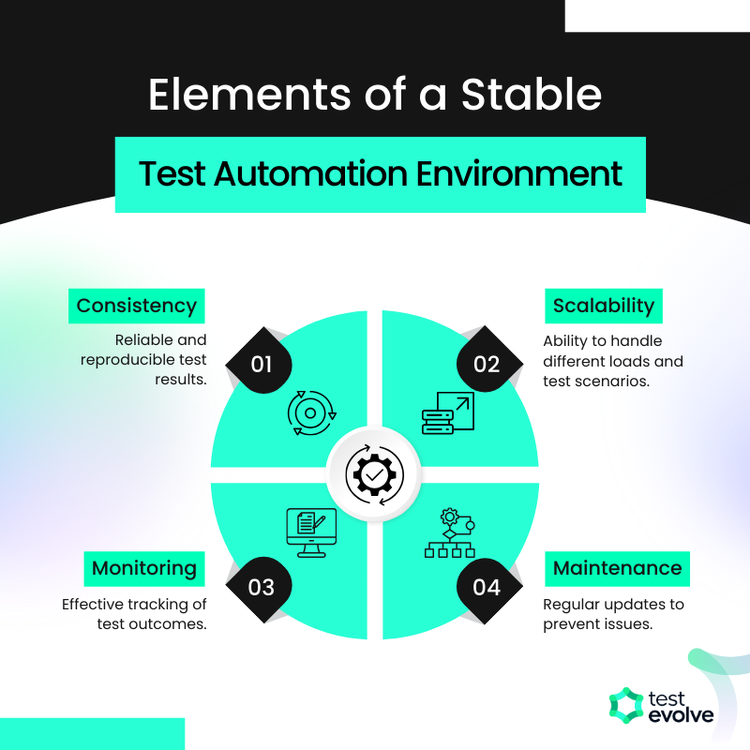

Key Elements of a Stable Test Automation Environment

Consistency is key: the environment should be identical across all test runs so that results are reliable and any issues found can be reproduced. Regular updates and maintenance are essential to keep the environment stable and functional. It should also include all the necessary testing tools and resources, such as test automation frameworks, scripts, and libraries.

Scalability is also important, as the environment should be able to handle varying loads and different testing scenarios. Effective monitoring and logging mechanisms should be in place to track the performance and outcomes of automated tests.

Benefits of a Stable Test Automation Environment

A stable test automation environment ensures that test results are accurate and reliable. It saves time by reducing the need for manual testing and re-testing, making the process more efficient. This approach is also cost-effective, as it reduces the cost associated with fixing bugs and issues post-release. Ultimately, it enhances the overall quality of the software by catching defects early in the development cycle.

Things That Might Make Your Environment Unfit for Purpose

Although a stable test environment offers many advantages, it’s essential to recognize the factors that can undermine it. Knowing what to watch for helps you maintain a reliable and efficient testing process.

One major factor is inconsistent configuration. Differences in software configuration, operating system versions, or hardware setups across the testing infrastructure can lead to inconsistent test results. This makes it difficult to identify and resolve issues accurately, as the same test might produce different outcomes in different environments.
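
One practical way to catch inconsistent configuration is to describe each environment's relevant settings as a simple key/value manifest and compare it with a reference before a test run starts. The sketch below is a minimal illustration under that assumption: `EnvManifest`, `findDrift`, and the example keys and versions are hypothetical, not part of any specific tool.

```typescript
// Hypothetical flat manifest of the settings that affect test results,
// e.g. OS image, database version, browser version.
type EnvManifest = Record<string, string>;

interface Drift {
  key: string;
  expected: string | undefined; // value in the reference environment
  actual: string | undefined;   // value in the environment being checked
}

// Compare an environment's manifest with a reference manifest and return
// every key that is missing on either side or has a different value.
function findDrift(reference: EnvManifest, actual: EnvManifest): Drift[] {
  const keys = new Set([...Object.keys(reference), ...Object.keys(actual)]);
  const drifts: Drift[] = [];
  for (const key of Array.from(keys).sort()) {
    if (reference[key] !== actual[key]) {
      drifts.push({ key, expected: reference[key], actual: actual[key] });
    }
  }
  return drifts;
}

// Example: one test agent is running an older database than the reference setup.
const reference: EnvManifest = { os: "ubuntu-22.04", postgres: "15.4", chrome: "126" };
const agentB: EnvManifest = { os: "ubuntu-22.04", postgres: "14.9", chrome: "126" };
console.log(findDrift(reference, agentB));
```

Here only the `postgres` entry is reported, which points straight at the agent whose results should not be trusted until it is brought back in line.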

Shared environments can also pose significant challenges. When several testers use the same environment at the same time, conflicts and unpredictable test results can follow. This is particularly problematic when testers do not coordinate their activities, resulting in overlapping tests and resource contention.
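
Coordination does not have to be elaborate. A minimal approach, sketched below, is a reservation with an expiry: a tester or CI job must hold the environment before running tests against it, and a crashed run cannot block it forever. `EnvironmentReservations` is a hypothetical, in-memory illustration; a real team would keep reservations in a shared store that every tester and job can see.

```typescript
// Hypothetical in-memory reservation book for shared test environments.
// Each reservation has an owner and an expiry time, so a crashed run
// cannot block an environment indefinitely.
class EnvironmentReservations {
  private reservations = new Map<string, { owner: string; expiresAt: number }>();

  // Try to reserve `env` for `owner` for `ttlMs` milliseconds.
  // Returns true if granted (or renewed by the current owner).
  reserve(env: string, owner: string, ttlMs: number, now: number = Date.now()): boolean {
    const current = this.reservations.get(env);
    if (current && current.owner !== owner && current.expiresAt > now) {
      return false; // someone else holds an unexpired reservation
    }
    this.reservations.set(env, { owner, expiresAt: now + ttlMs });
    return true;
  }

  // Release the environment, but only if `owner` actually holds it.
  release(env: string, owner: string): void {
    if (this.reservations.get(env)?.owner === owner) {
      this.reservations.delete(env);
    }
  }
}

const book = new EnvironmentReservations();
console.log(book.reserve("uat-1", "alice", 60_000, 0));    // true
console.log(book.reserve("uat-1", "bob", 60_000, 1_000));  // false: alice holds it
console.log(book.reserve("uat-1", "bob", 60_000, 61_000)); // true: alice's reservation expired
```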

Insufficient infrastructure is another critical factor. Under-provisioned resources, such as too little memory or slow processors, can significantly degrade the performance of a test environment. This results in longer test runs and hinders the effectiveness of the testing process, making it difficult to meet project deadlines.

Outdated software can cause compatibility issues, leading to erroneous test results and prolonged debugging. Keeping the test environment patched, with software versions aligned with what will run in production, is essential to avoid these problems and ensure smooth operation.
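
A lightweight guard against outdated software is to compare installed component versions with agreed minimum versions and fail fast before the suite runs. The sketch assumes plain dotted numeric versions such as `18.19.0` (pre-release tags like `-beta` are not handled), and the component names and version numbers are illustrative only.

```typescript
// Compare two dotted numeric versions, e.g. "18.19.0" vs "20.1".
// Returns a negative number, zero, or a positive number, like a sort comparator.
// Missing segments count as 0, so "20.1" equals "20.1.0".
function compareVersions(a: string, b: string): number {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  const length = Math.max(pa.length, pb.length);
  for (let i = 0; i < length; i++) {
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) return diff;
  }
  return 0;
}

// List every component that is missing or installed below its required minimum.
function outdatedComponents(
  installed: Record<string, string>,
  minimums: Record<string, string>,
): string[] {
  return Object.keys(minimums).filter((name) => {
    const version: string | undefined = installed[name];
    return version === undefined || compareVersions(version, minimums[name]) < 0;
  });
}

const installed = { node: "18.19.0", chrome: "126.0.6478.126" };
const minimums = { node: "20.0.0", chrome: "120", java: "17" };
console.log(outdatedComponents(installed, minimums)); // [ 'node', 'java' ]
```

Comparing segments numerically matters: a plain string comparison would wrongly treat `1.2.10` as older than `1.2.9`.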

Uncontrolled test data can become corrupted or irrelevant, which undermines test accuracy and leads to unreliable results. Testers should regularly clean up old or unnecessary data to keep test outcomes accurate and reliable.
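
Regular clean-up is much safer to automate when test-created records can be told apart from baseline data. The sketch below assumes a hypothetical convention in which every record carries a `createdBy` marker and a creation timestamp; it selects only test-created records older than a retention window, so seed data is never touched.

```typescript
// Hypothetical shape of a record in the test database.
interface TestRecord {
  id: string;
  createdBy: "test" | "seed"; // "seed" = baseline reference data that must be kept
  createdAt: number;          // epoch milliseconds
}

// Return the ids of test-created records older than the retention window.
// Seed data is never selected, whatever its age.
function staleTestRecordIds(records: TestRecord[], retentionMs: number, now: number): string[] {
  return records
    .filter((r) => r.createdBy === "test" && now - r.createdAt > retentionMs)
    .map((r) => r.id);
}

const DAY = 24 * 60 * 60 * 1000;
const now = Date.UTC(2024, 0, 10);
const records: TestRecord[] = [
  { id: "order-1", createdBy: "test", createdAt: now - 3 * DAY },
  { id: "order-2", createdBy: "test", createdAt: now - 2 * 60 * 60 * 1000 }, // 2 hours old
  { id: "customer-ref", createdBy: "seed", createdAt: now - 365 * DAY },
];

// Keep one day of test data for investigating recent failures; select the rest.
console.log(staleTestRecordIds(records, DAY, now)); // [ 'order-1' ]
```

Keeping a short retention window, rather than deleting everything immediately, leaves recent data available when investigating a failure.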

Network issues, such as unstable connections or fluctuating speeds, can affect tests, especially those that rely on internet connectivity. Testers need to monitor network status and run tests under stable network conditions to prevent false positives or negatives.

External events like network outages, power cuts, or server downtime can also disrupt the test environment and impact the reliability of test results.

Finally, a lack of version control can lead to discrepancies in test results. Without proper version control, different versions of code and dependencies can be present in the testing environment, causing inconsistencies. Implementing robust version control practices ensures that all team members are working with the same codebase and dependencies, leading to more reliable test outcomes.

Ensuring Test and Test Data Independence

Test independence is an often-overlooked aspect of effective software testing. Each test should be designed to manage its own test data, so that it operates independently of other tests. This approach minimizes dependencies and reduces the risk of one test affecting the outcome of another. With independent test data management, testers can ensure that each test case executes as expected and provides reliable results that reflect the true behavior of the application under test.
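
One common way to achieve this is for each test to create the data it needs under a unique identifier instead of reusing records that another test created or left behind. In the sketch below, `InMemoryCustomerStore` is a hypothetical stand-in for the application's API or database, and `uniqueId` is an illustrative helper rather than part of any framework.

```typescript
// Stand-in for the application's data layer (hypothetical).
class InMemoryCustomerStore {
  private customers = new Map<string, { name: string; balance: number }>();

  create(id: string, name: string, balance: number): void {
    this.customers.set(id, { name, balance });
  }

  get(id: string): { name: string; balance: number } | undefined {
    return this.customers.get(id);
  }
}

// Build an identifier that is very unlikely to clash with data created by other
// tests or a parallel run: timestamp + per-process counter + random suffix.
let counter = 0;
function uniqueId(prefix: string): string {
  counter += 1;
  const random = Math.random().toString(36).slice(2, 8);
  return `${prefix}-${Date.now().toString(36)}-${counter}-${random}`;
}

const store = new InMemoryCustomerStore();

// The test creates, and owns, exactly the data it asserts on, so it does not
// depend on run order or on records left behind by other tests.
function testNewCustomerStartsWithOpeningBalance(): void {
  const id = uniqueId("customer");
  store.create(id, "Test Customer", 100);
  const customer = store.get(id);
  if (!customer || customer.balance !== 100) {
    throw new Error(`unexpected state for ${id}`);
  }
}

testNewCustomerStartsWithOpeningBalance();
testNewCustomerStartsWithOpeningBalance(); // safe to repeat: each run uses fresh data
```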

Each test should also include a ‘clean up’ or ‘tear down’ step that runs after it completes, regardless of whether it passed or failed. This step resets the environment to its original state, removes any temporary data, and releases resources that were allocated during the test. Proper tear-down procedures prevent leftover data from interfering with subsequent test outcomes and maintain environment integrity.
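
In most languages, the ‘regardless of outcome’ requirement maps onto `try`/`finally`. Test frameworks wrap this in their own hooks (Cucumber's `After` hooks, for example), but the underlying pattern can be sketched without a framework. `withTestResources` and the `TestResource` shape below are hypothetical.

```typescript
// A resource a test allocates and must give back (hypothetical shape).
interface TestResource {
  name: string;
  release(): void;
}

// Run `test` with freshly allocated resources and always release them
// afterwards, whether the test passes or throws. Resources are released
// in reverse order of allocation.
function withTestResources(
  allocate: () => TestResource[],
  test: (resources: TestResource[]) => void,
): void {
  const resources = allocate();
  try {
    test(resources);
  } finally {
    for (const resource of [...resources].reverse()) {
      resource.release();
    }
  }
}

// Usage: the test below fails, yet its temporary data is still removed.
const released: string[] = [];
const makeResource = (name: string): TestResource => ({
  name,
  release: () => released.push(name),
});

try {
  withTestResources(
    () => [makeResource("temp-user"), makeResource("temp-order")],
    () => {
      throw new Error("assertion failed");
    },
  );
} catch (e) {
  console.log((e as Error).message); // assertion failed
}
console.log(released); // [ 'temp-order', 'temp-user' ]
```

A more defensive version would keep releasing the remaining resources even if one `release` call throws; that is omitted here for brevity.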

What is Continuous Integration/Deployment and how does my test environment need to support it?

Jenkins is an example of a widely used open-source automation server that plays a pivotal role in Continuous Integration (CI) pipelines, enabling teams to automate various stages of software development, including building, testing, and deploying applications. When integrated into a CI pipeline, Jenkins orchestrates the workflow, ensuring that code changes are automatically tested and deployed to a test environment, thereby reducing manual intervention and increasing the speed of delivery.

Jenkins can be configured to monitor a source code repository, such as Git, for any changes. When a developer pushes new code, Jenkins triggers a build process. This involves compiling the code and packaging it into deployable artifacts, such as JAR or WAR files, or Docker images. Jenkins can also integrate with build tools like Maven, Gradle, and npm to streamline this process.

After the build, Jenkins can automatically execute a suite of tests, including unit, integration, and acceptance tests. Jenkins can run and report on tests written with frameworks and tools such as JUnit, TestNG, and Selenium, allowing teams to confirm that new code does not introduce regressions or break existing functionality. If any tests fail, Jenkins can halt the pipeline and notify the development team, ensuring that defects are caught early.
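
Jenkins pipelines are normally defined in a Jenkinsfile, so the following is not Jenkins code. It is a small TypeScript model of the fail-fast behavior just described: stages run in order, and the first failing stage stops the pipeline and triggers a notification. The stage names and the `notify` callback are hypothetical.

```typescript
// A pipeline stage: returns true on success, false on failure.
interface Stage {
  name: string;
  run: () => boolean;
}

interface PipelineResult {
  completed: string[];  // stages that ran and passed
  failedStage?: string; // first stage that failed, if any
}

// Run stages in order and stop at the first failure, so later stages
// (e.g. deploying to the test environment) never run on broken code.
function runPipeline(stages: Stage[], notify: (message: string) => void): PipelineResult {
  const completed: string[] = [];
  for (const stage of stages) {
    if (!stage.run()) {
      notify(`Pipeline halted: stage "${stage.name}" failed`);
      return { completed, failedStage: stage.name };
    }
    completed.push(stage.name);
  }
  return { completed };
}

const result = runPipeline(
  [
    { name: "build", run: () => true },
    { name: "unit tests", run: () => true },
    { name: "integration tests", run: () => false }, // simulated failing suite
    { name: "deploy to test environment", run: () => true },
  ],
  (message) => console.log(message),
);
console.log(result);
```

With the simulated failure in the integration tests, the deploy stage never runs, which is what keeps broken builds out of the shared test environment.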

Once the code passes the tests, Jenkins can automate the deployment to a test environment. This deployment can involve various steps, such as copying artifacts to a server, running deployment scripts, or spinning up containers using tools like Docker or Kubernetes. Jenkins’ flexibility allows it to be easily integrated with cloud services, virtual machines, or containerized environments, enabling a consistent deployment process across different environments.

The test environment must be able to scale dynamically to accommodate the frequent deployments triggered by the CI pipeline. Containerization tools like Docker and orchestration platforms like Kubernetes are often employed to create consistent, isolated test environments that can be spun up and torn down on demand. This ensures that tests are run in environments that closely mimic production, reducing the chances of environment-related issues.

To accelerate feedback, the test environment should support parallel testing, where multiple test suites can run simultaneously. Jenkins can orchestrate parallel builds and tests across different nodes or containers, distributing the workload and reducing the overall testing time.
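
In Jenkins this is typically done with parallel stages spread across agents. The sketch below illustrates the same idea within a single process: independent suites run concurrently, with a cap on how many run at once so the test environment is not overloaded. `runSuites` and the simulated suites are hypothetical, and parallel execution is only safe when suites are independent of one another, as discussed under test independence.

```typescript
// A test suite that resolves to true (passed) or false (failed).
type Suite = { name: string; run: () => Promise<boolean> };

// Run suites concurrently, with at most `limit` in flight at any time.
// Each worker repeatedly takes the next unstarted suite until none remain.
async function runSuites(suites: Suite[], limit: number): Promise<Map<string, boolean>> {
  const results = new Map<string, boolean>();
  let next = 0;
  async function worker(): Promise<void> {
    while (next < suites.length) {
      // Safe without locks: single-threaded JS reads and increments `next`
      // synchronously, so two workers never pick the same suite.
      const suite = suites[next++];
      results.set(suite.name, await suite.run());
    }
  }
  const workers = Array.from({ length: Math.min(limit, suites.length) }, () => worker());
  await Promise.all(workers);
  return results;
}

// Simulated suites that take different amounts of time.
const delay = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
const suites: Suite[] = ["checkout", "search", "login", "profile"].map((name, i) => ({
  name,
  run: async () => {
    await delay(50 * (i + 1));
    return name !== "search"; // pretend the search suite fails
  },
}));

runSuites(suites, 2).then((results) => {
  for (const [name, passed] of results) {
    console.log(`${name}: ${passed ? "passed" : "failed"}`);
  }
});
```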

For effective debugging and analysis, the test environment should integrate with monitoring and logging tools. Jenkins can be configured to capture logs, metrics, and reports from the test environment, providing insights into test failures and performance bottlenecks.

The configuration of the test environment should be version-controlled and automated, often managed through Infrastructure as Code (IaC) tools like Terraform or Ansible. Jenkins can trigger the setup and teardown of these environments, ensuring that each test run starts with a fresh, consistent environment.

Poorly Managed Test Environments and Their Impact on Different Test Automation Framework Types

A poorly managed test environment can significantly impact both No Code and Coded test automation frameworks, though the effects differ due to the unique characteristics of each approach.

In No Code test automation frameworks, the simplicity and ease of use that make them appealing can also become a drawback in unstable environments. These frameworks rely on visual interfaces and pre-built components, which abstract away the complexities of the underlying infrastructure. When the test environment is poorly managed, with inconsistencies or instability, tests can fail unpredictably.

Diagnosing the root cause of these failures becomes challenging, as users may struggle to determine whether the issue lies with the application, the environment, or the limitations of the No Code tool itself. Furthermore, No Code frameworks often assume a stable environment, and when that assumption is violated, tests may become unreliable.

The limited customization options in these frameworks also make it difficult to adapt to environment-specific issues, which can result in incorrect test outcomes and increased manual intervention.

Coded test automation frameworks, while more flexible, also face challenges in a poorly managed environment. These frameworks allow for detailed customization and interaction with the environment, but this comes with its own set of complexities. In an unstable environment, the additional control offered by Coded frameworks can lead to more complex debugging.

Testers may need to sift through extensive logs to distinguish between application bugs and environment-related failures, which can slow down the testing process. Additionally, inconsistent environments may require testers to write extra code to handle various configurations or infrastructure issues, increasing the maintenance burden.

While Coded frameworks offer the ability to implement advanced solutions like environment health checks or dynamic configurations, doing so requires time and effort that could detract from the primary goal of testing.
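
A basic environment health check probes each dependency before the suite starts and refuses to run if any probe fails, so a broken environment is reported as an environment problem rather than as a batch of misleading test failures. The probe names are hypothetical; a real probe might make an HTTP request or open a database connection, but here each one is an injected async function so the sketch runs without a network.

```typescript
// Outcome of checking one dependency of the test environment.
interface ProbeResult {
  name: string;
  healthy: boolean;
  detail?: string;
}

// A probe rejects (throws) if its dependency is unhealthy.
type Probe = { name: string; check: () => Promise<void> };

// Run all probes in parallel and collect every result, rather than stopping
// at the first error, so the report lists all unhealthy dependencies at once.
async function checkEnvironment(probes: Probe[]): Promise<ProbeResult[]> {
  return Promise.all(
    probes.map(async (probe): Promise<ProbeResult> => {
      try {
        await probe.check();
        return { name: probe.name, healthy: true };
      } catch (e) {
        return { name: probe.name, healthy: false, detail: (e as Error).message };
      }
    }),
  );
}

// Gate the test run: only start the suite when every dependency is healthy.
async function runIfHealthy(
  probes: Probe[],
  runSuite: () => Promise<void>,
): Promise<"ran" | "environment-unhealthy"> {
  const results = await checkEnvironment(probes);
  const unhealthy = results.filter((r) => !r.healthy);
  if (unhealthy.length > 0) {
    for (const r of unhealthy) {
      console.error(`ENVIRONMENT: ${r.name} unavailable (${r.detail})`);
    }
    return "environment-unhealthy";
  }
  await runSuite();
  return "ran";
}

runIfHealthy(
  [
    { name: "web app", check: async () => {} },
    { name: "database", check: async () => { throw new Error("connection refused"); } },
  ],
  async () => console.log("running tests..."),
).then((status) => console.log(status));
```

Returning a distinct status such as `environment-unhealthy` lets a CI job flag the run differently from a genuine test failure, which reduces the log-sifting described above.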

Test Evolve is a coded test automation solution with a unique web app test recorder for Cucumber with Ruby, JavaScript, or TypeScript. A free, fully featured Test Evolve Evaluation is available for 30 days. Get testing now!