A Mandatory Checklist for Evaluating a Test Automation Tool

Selecting the right test automation tool is essential for building a scalable and efficient testing strategy. At Test Evolve, with years of experience in software development, we have seen how the right tool can lead to more streamlined processes, less manual effort, and better software quality.

Conversely, the wrong choice can lead to inefficiencies, false positives, and high maintenance costs. In fact, 22% of companies cite test instability as their biggest challenge—often due to automation tools that lack proper CI/CD integration, causing delays and unreliable results.

To help you make an informed choice, here’s an 11-point checklist for evaluating test automation tools. This framework allows you to compare options objectively and select the one that best fits your team's requirements while ensuring long-term scalability.

Let’s get started!

Q1. Does the Tool Fit Your Testing Needs & Tech Stack?

A test automation tool must align with your testing scope—whether it’s functional, API, UI, performance, or regression testing. Relying solely on one type (e.g., UI automation) risks missing backend failures, while weak API support can leave integration bugs undetected.

Cross-browser and cross-platform testing is equally critical. Just because a test passes on Chrome or Windows doesn’t mean it will behave the same way on Safari, macOS, or Android. A strong tool should support real-device testing to avoid false positives from emulators.

Beyond execution, seamless CI/CD integration is a must. A tool that doesn’t work well with Jenkins, GitHub Actions, or GitLab can slow down deployments instead of accelerating them. Poor compatibility with existing frameworks and bug-tracking tools can create unnecessary friction in development workflows.

Takeaway: A good test automation tool doesn’t just run tests—it integrates seamlessly into your development ecosystem. If it doesn’t work well with your CI/CD, frameworks, and reporting systems, automation becomes a burden instead of a solution.

Q2. Is the Tool Within Your Budget?

Budgeting for a test automation tool goes beyond licensing costs. While open-source options may seem cost-effective, they often require significant setup and ongoing maintenance. Paid tools may have upfront fees but provide support, documentation, and easier adoption.

When comparing test automation tools, look beyond the license fee to infrastructure requirements, training expenses, and long-term maintenance overhead. ROI should reflect not only cost savings but also faster execution times, reduced manual effort, and fewer post-release defects.

Choosing a tool that reduces test maintenance while improving efficiency will be far more cost-effective than an initially cheaper but high-maintenance solution.
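To make the comparison concrete, here is a minimal sketch of a total-cost-of-ownership calculation. The `ToolCost` class, its field names, and every figure in it are hypothetical placeholders rather than real pricing; the point is only that maintenance hours and training belong in the same sum as the license fee.

```python
from dataclasses import dataclass


@dataclass
class ToolCost:
    """Cost inputs for one candidate tool (all figures are hypothetical)."""
    name: str
    license_per_year: float
    infrastructure_per_year: float
    training_one_off: float
    maintenance_hours_per_month: float
    hourly_rate: float

    def total_cost(self, years: int) -> float:
        """Total cost of ownership over the given number of years."""
        recurring = self.license_per_year + self.infrastructure_per_year
        maintenance = self.maintenance_hours_per_month * 12 * self.hourly_rate
        return self.training_one_off + years * (recurring + maintenance)


# Illustrative numbers only; replace them with your own estimates.
open_source = ToolCost("open-source stack", 0, 6_000, 8_000, 40, 50)
commercial = ToolCost("commercial tool", 15_000, 2_000, 3_000, 10, 50)

for tool in (open_source, commercial):
    print(f"{tool.name}: {tool.total_cost(years=3):,.0f} over 3 years")
```

With different inputs the ranking can easily flip, which is exactly why the estimate is worth doing with your own numbers before comparing price tags.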

Takeaway: The cost of automation goes beyond licensing. Infrastructure, training, and maintenance can significantly impact ROI in ways teams often underestimate. Open-source tools may seem cost-effective initially, but they often require in-house expertise for setup and upkeep. Paid solutions may offer built-in support, but that doesn’t always guarantee easier adoption. The right tool isn’t just the cheapest—it’s the one that scales efficiently without hidden costs eating into its value over time.

Q3. Can Your Team Effectively Use This Tool?

The usability of a test automation tool isn’t just a matter of convenience; it determines how quickly teams can develop, execute, and maintain automated tests. If a tool requires programming knowledge beyond the team's expertise, automation may stall or require costly training.

1. Code Complexity & Learning Curve

Some tools demand proficiency in scripting languages like Python, Java, or JavaScript, while others provide low-code or codeless interfaces. While low-code platforms accelerate onboarding, they might limit test customization. On the other hand, code-based frameworks allow deeper control but require technical expertise.

2. Onboarding & Skill Development

Even experienced teams face a transition period when adopting a new tool. The presence of step-by-step documentation, hands-on tutorials, and real-world examples significantly reduces learning friction. Tools with certification programs or structured training resources help teams ramp up more efficiently.

3. Community & Support Ecosystem

A tool with an active developer community, responsive support, and extensive knowledge bases allows teams to troubleshoot issues without waiting for vendor assistance. Forums, GitHub repositories, and API documentation indicate whether a tool is widely adopted or isolated.

Takeaway: An automation tool is only as good as your team’s ability to use it. Low-code/no-code solutions can reduce setup time but may still require domain-specific knowledge. On the other hand, script-based frameworks offer deeper control but demand coding expertise. Without clear documentation, structured training, or an active support community, even a powerful tool can become a bottleneck rather than a solution. The key is finding a tool that balances capability with accessibility, ensuring seamless adoption across skill levels.

Q4. Does It Offer Testing on Real Devices and Browsers?

Test results must reflect real-world conditions—what works in a simulated environment may fail on actual devices.

1. The Limitation of Emulators & Simulators

While simulators provide a controlled environment, they cannot replicate device-specific behaviors such as:

Touch gestures and UI responsiveness on mobile apps

CPU and memory constraints affecting application performance

Network latency, real-time GPS location, and battery consumption

For example, an iOS app that functions well on a simulator might crash on a real iPhone because of hardware differences such as the CPU and memory constraints listed above.

2. The Need for Cross-Browser & Cross-Device Testing

A robust test automation tool should:

Support testing across multiple browsers (Chrome, Firefox, Safari, Edge) and versions

Offer access to real devices with different OS versions to check compatibility

Allow testing on physical devices or cloud-based real-device farms

3. Cloud vs. On-Premise Device Labs

Building an in-house device lab is expensive and requires continuous maintenance. Cloud-based testing services provide:

Instant access to hundreds of devices and OS versions

Scalability without physical infrastructure constraints

Ability to test globally without geographical limitations

Takeaway: Real-device testing is non-negotiable for reliable test automation. Prioritize tools that provide access to real-world testing environments, ensuring applications function seamlessly across devices, browsers, and user conditions.

Q5. Does It Have a Strong Reporting & Debugging Mechanism?

Automation is only as effective as its reporting capabilities—without clear, actionable reports, teams waste hours diagnosing failures.

1. Why Basic Reporting is Insufficient

A simple pass/fail log isn’t enough for debugging. Comprehensive reports should provide:

Detailed error logs indicating why a test failed

Screenshots or video recordings of execution steps

Stack traces and network logs for identifying root causes

For example, a UI test failing due to an element not found error could stem from:

A minor UI change in production

A delayed element rendering due to network latency

A broken dependency affecting the application

Without a detailed error snapshot, developers must manually re-run tests, slowing down resolution.

2. Integration with Test Management & CI/CD Pipelines

A reporting system should:

Integrate with defect tracking and test management tools (e.g., Jira, TestRail)

Provide customizable dashboards for monitoring trends

Allow real-time alerts in Slack or email when failures occur

3. Debugging at Scale

When running hundreds of automated tests per cycle, it’s inefficient to manually review logs. The best tools provide:

Automated log analysis to highlight trends in test failures

AI-powered failure categorization (e.g., UI issues vs. backend errors)

Parallel execution reports to track performance variations

Takeaway: A strong reporting and debugging system reduces downtime by pinpointing failures faster. When evaluating test automation tools, choose the ones with comprehensive logs, CI/CD integrations, and automated failure analysis to streamline test cycles.

Q6. Can the Tool Scale with Your Project’s Needs?

A tool that works well for a small project may struggle to scale when test volumes increase. Scalability depends on:

1. Parallel & Distributed Test Execution

As test suites grow, execution time should not become a bottleneck. Tools should:

Support parallel execution across multiple environments

Enable distributed testing on cloud grids or multiple machines

Allow test sharding (splitting tests across resources)

For example, running 10,000 test cases sequentially may take hours or days—but with parallel execution, it can be reduced to minutes.
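The arithmetic behind that claim can be sketched in a few lines. The example below assumes a hypothetical average of 3 seconds per test and 50 parallel workers; both numbers are placeholders, and the estimate ignores startup cost and uneven test durations.

```python
import math


def shard(tests: list[str], shard_count: int) -> list[list[str]]:
    """Split tests round-robin so each shard gets a near-equal share."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    return [tests[i::shard_count] for i in range(shard_count)]


def estimated_minutes(test_count: int, seconds_per_test: float, workers: int) -> float:
    """Rough wall-clock estimate, ignoring startup cost and uneven durations."""
    tests_per_worker = math.ceil(test_count / workers)
    return tests_per_worker * seconds_per_test / 60


tests = [f"test_{n}" for n in range(10_000)]
shards = shard(tests, 50)

print(len(shards), "shards of", len(shards[0]), "tests each")
print("sequential:", estimated_minutes(10_000, 3, workers=1), "minutes")
print("50 workers:", estimated_minutes(10_000, 3, workers=50), "minutes")
```

Under these assumptions the run drops from 500 minutes to 10. In practice the slowest shard sets the finish time, so tools that balance shards by historical duration rather than by count tend to get closer to the ideal figure.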

2. Handling Large Data Sets & Performance Testing

Scaling is not just about test count; it’s about handling large volumes of data and high user loads. A scalable tool should:

Process large datasets efficiently without memory leaks

Support API performance and load testing

Provide resource monitoring during test execution

3. Maintainability at Scale

A tool that scales poorly increases test maintenance overhead. It should:

Automatically update test scripts when minor UI changes occur

Support reusable components to avoid script duplication

Allow configurable test environments to support multiple projects

Takeaway: Scalability ensures that test automation remains efficient as projects grow. Choose tools that support parallel execution, performance testing, and low-maintenance scalability strategies for future-proof test automation.

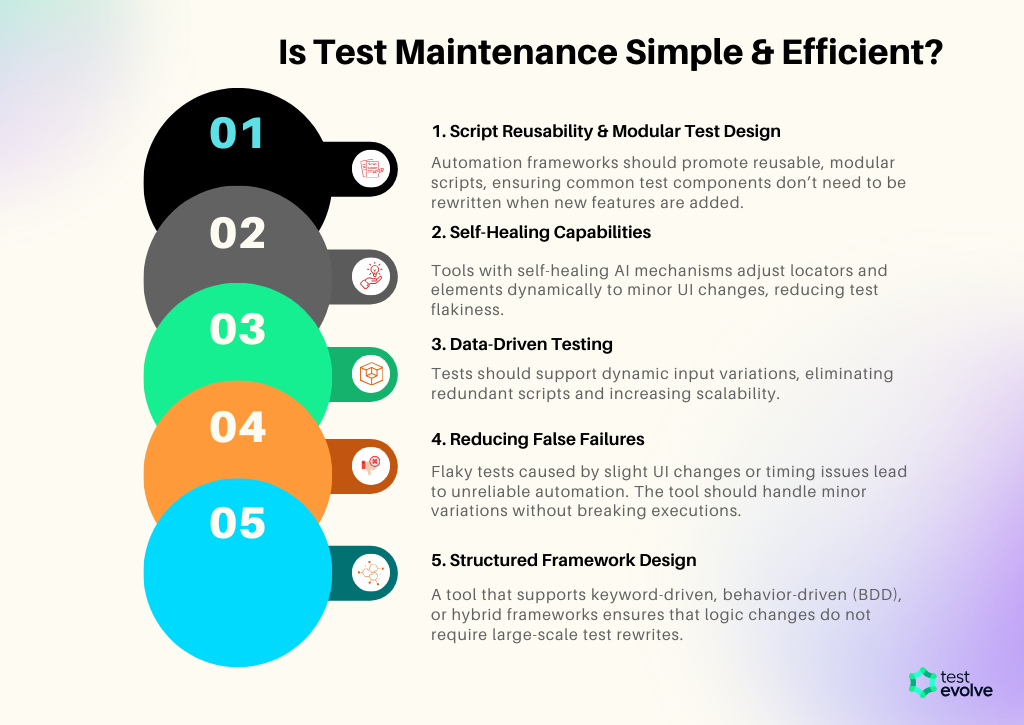

Q7. Is Test Maintenance Simple & Efficient?

Test automation is only valuable if it remains low-maintenance. As applications evolve, automation scripts must adapt to UI and functionality changes without constant rework. A robust tool should support:

1. Script Reusability & Modular Test Design

Automation frameworks should promote reusable, modular scripts, ensuring common test components don’t need to be rewritten when new features are added.

2. Self-Healing Capabilities

Tools with AI-based self-healing mechanisms update locators dynamically when the UI changes in minor ways, reducing test flakiness.
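Commercial self-healing features are usually more sophisticated, but the core idea can be approximated with an ordered list of fallback locators. The sketch below is a simplified stand-in: `find_with_fallback` is a hypothetical helper, and `fake_page` is a plain dictionary playing the role of a browser page, not a real driver API.

```python
from typing import Callable, Optional, Sequence


def find_with_fallback(
    find: Callable[[str], Optional[object]],
    locators: Sequence[str],
) -> tuple[object, str]:
    """Try each locator in order; return the first element found and the locator that worked."""
    for locator in locators:
        element = find(locator)
        if element is not None:
            return element, locator
    raise LookupError(f"No locator matched: {list(locators)}")


# Stand-in for a page after a redesign: the old id is gone,
# but the data-test attribute and the visible text remain.
fake_page = {"[data-test=checkout]": "<button>", "text=Checkout": "<button>"}

element, used = find_with_fallback(
    fake_page.get,
    ["#checkout-btn", "[data-test=checkout]", "text=Checkout"],
)
print("matched via", used)
```

Returning the locator that actually matched matters: logging it lets the team update the primary locator later instead of silently relying on the fallback forever.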

3. Data-Driven Testing

Tests should support dynamic input variations, eliminating redundant scripts and increasing scalability.
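A data-driven test keeps one piece of test logic and feeds it a table of inputs and expected results. Here is a minimal sketch in plain Python; `apply_discount` is a hypothetical function under test, and most test frameworks offer a built-in equivalent of the `run_cases` loop.

```python
def apply_discount(total: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


# One row per scenario: (total, percent, expected result).
CASES = [
    (100.0, 0, 100.0),
    (100.0, 25, 75.0),
    (80.0, 50, 40.0),
    (59.99, 100, 0.0),
]


def run_cases(cases):
    """Run the same check against every row and collect any mismatches."""
    failures = []
    for total, percent, expected in cases:
        actual = apply_discount(total, percent)
        if actual != expected:
            failures.append((total, percent, expected, actual))
    return failures


print("failures:", run_cases(CASES))
```

Adding a new scenario means adding a row, not a script, which is where the maintenance saving comes from.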

4. Reducing False Failures

Flaky tests caused by slight UI changes or timing issues lead to unreliable automation. The tool should handle minor variations without breaking executions.
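One common way to absorb timing variation is to poll for a condition instead of sleeping for a fixed period. The helper below is a generic sketch of that idea; `wait_until` and its default values are assumptions for illustration, and most automation frameworks ship their own equivalent.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Simulate an element that only "renders" about 0.3 seconds after the test starts.
start = time.monotonic()
rendered = wait_until(lambda: time.monotonic() - start > 0.3, timeout=2.0)
print("element rendered:", rendered)
```

The test proceeds as soon as the condition holds and fails only when the timeout is genuinely exceeded, which is more reliable than a fixed delay.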

5. Structured Framework Design

A tool that supports keyword-driven, behavior-driven (BDD), or hybrid frameworks ensures that logic changes do not require large-scale test rewrites.

Takeaway: A test automation tool that lacks maintainability features leads to higher costs, wasted time, and unreliable test results. Prioritize tools with modular scripting, self-healing, and low-maintenance test architectures to ensure long-term efficiency.

Q8. Does It Offer Seamless Integration & Extensibility?

Automation tools don’t work in isolation—they must fit into the broader software development ecosystem. The ability to integrate with CI/CD pipelines, version control, bug tracking, reporting tools, and cloud platforms determines whether a tool enhances efficiency or becomes a bottleneck.

1. How Poor Integration Impacts Testing

CI/CD Delays: If builds don't trigger automated tests reliably, or test results can't gate deployments, release cycles slow down.

Data Silos: A tool that doesn’t sync test results with reporting dashboards forces teams to manually track defects, reducing visibility.

Limited Scalability: Without APIs and webhook support, teams struggle to customize or extend automation frameworks.

2. Industry-Specific Integration Needs

Finance: Requires automation tools that sync with compliance tracking systems for audit trails.

SaaS & DevOps: Needs deep CI/CD pipeline integration for real-time build verification.

E-commerce: Relies on real-device cloud testing for multi-location, cross-platform validation.

3. What to Look for in an Extensible Tool

API-first architecture for custom integrations

Plugin ecosystem for enhanced functionality

Seamless connectivity with test management, version control, and reporting tools

A tool with weak integration doesn’t just slow down testing—it limits automation’s ability to scale and adapt. Always assess native integrations, extensibility features, and customization options before committing.

Takeaway: An automation tool that fails to integrate seamlessly disrupts workflows rather than enhancing them. Prioritize solutions with robust API support, extensibility options, and compatibility with your existing CI/CD, version control, and reporting tools so that automation can scale with evolving project needs.

Q9. Does It Provide Reliable Support & Community Engagement?

A robust test automation tool isn’t just about features. It’s about how quickly issues get resolved when things go wrong. Test automation inevitably encounters unexpected failures, environment-specific bugs, and framework compatibility issues. Without responsive support and an engaged community, teams lose valuable time troubleshooting instead of progressing.

Assess support by raising trial support tickets; track response times, resolution depth, and technical expertise. A tool with dedicated technical assistance, SLAs (Service Level Agreements), and engineering-backed support teams is more reliable than one relying solely on user forums.

Beyond official support, a thriving user community is a lifeline. Check developer forums, GitHub activity, Stack Overflow discussions, or Discord/Slack groups. Active communities drive faster real-world solutions and ensure the tool is widely adopted and evolving.

A tool with inconsistent updates, slow responses, or a stagnant community signals future risks. Reliable support isn’t a luxury; it’s critical for minimizing downtime and maintaining automation efficiency.

Takeaway: Support quality is part of the tool. Test vendor responsiveness with real tickets during the trial, check for SLAs, and confirm that the community is active. Slow responses, irregular updates, or a stagnant community are early signs of downtime and stalled automation later.

Q10. Is the Tool Secure & Compliant with Industry Standards?

Security and compliance are critical when selecting a test automation tool, especially for industries handling sensitive data. A tool lacking proper security controls can lead to data breaches, unauthorized access, and compliance violations, causing legal and reputational damage.

Compliance with Industry Standards: Ensure the tool adheres to GDPR, ISO 27001, SOC 2, or HIPAA for data protection. Organizations in finance, healthcare, and SaaS should prioritize tools with built-in encryption, access controls, and compliance certifications to mitigate legal risks.

Role-Based Access Control (RBAC) & Data Protection: RBAC restricts access to tests, environments, and test data based on user roles, which supports compliance. For example, financial services testers should not have access to backend transaction logs.

Secure Deployment & Updates: Prefer tools offering private cloud or on-premise deployment for regulated environments. Regular security patches and updates protect against evolving cyber threats.
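The role-based access control described above boils down to a mapping from roles to permitted actions. The sketch below shows the idea in its simplest form; the role names and permission strings are hypothetical, and a real tool would typically load them from its own configuration or an identity provider.

```python
from enum import Enum


class Role(Enum):
    TESTER = "tester"
    TEST_LEAD = "test_lead"
    ADMIN = "admin"


# Hypothetical permission names, chosen only for this example.
PERMISSIONS = {
    Role.TESTER: {"run_tests", "view_reports"},
    Role.TEST_LEAD: {"run_tests", "view_reports", "edit_suites"},
    Role.ADMIN: {"run_tests", "view_reports", "edit_suites", "view_transaction_logs"},
}


def is_allowed(role: Role, action: str) -> bool:
    """Return True only if the role has been explicitly granted the action."""
    return action in PERMISSIONS.get(role, set())


print(is_allowed(Role.TESTER, "run_tests"))
print(is_allowed(Role.TESTER, "view_transaction_logs"))
```

The check denies by default: anything not explicitly granted is refused, which is the behavior to look for when reviewing a tool's access model.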

Takeaway: A secure automation tool protects data, supports regulatory compliance, and mitigates security risks in high-stakes industries.

Q11. Have You Conducted a Proof of Value (PoV) Before Purchase?

Selecting a test automation tool is a long-term commitment, and a Proof of Value (PoV) assessment helps determine whether it truly aligns with your project’s needs.

Many teams make decisions based on marketing claims or trial demos, only to later find out that the tool doesn’t scale well, lacks key integrations, or introduces maintenance overhead. A structured PoV helps mitigate these risks.

Steps to Conduct a Meaningful PoV:

Define Success Criteria: What does success look like for your team? Consider factors like execution speed, script maintainability, ease of integration, and ROI improvements.

Test Against Real Scenarios: Avoid ideal conditions—evaluate the tool on real workflows, actual test cases, and your CI/CD pipeline to see how it handles practical challenges.

Involve Key Stakeholders: Gather feedback from testers, developers, and DevOps teams to assess usability, debugging efficiency, and fit within the software development lifecycle.

Identify Potential Bottlenecks: If the tool struggles with complex scenarios, requires excessive maintenance, or slows down releases, it may not be a good long-term investment.
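One way to turn the steps above into a comparable result is a weighted scorecard. The sketch below is only an illustration: the criteria are taken from the success factors listed earlier, while the weights, the 1-to-5 scale, and the scores for "Tool A" and "Tool B" are invented placeholders that your stakeholders would replace.

```python
# Weights are hypothetical and sum to 100; adjust them to your priorities.
WEIGHTS = {
    "execution_speed": 30,
    "script_maintainability": 30,
    "ease_of_integration": 25,
    "roi": 15,
}


def weighted_score(scores: dict[str, float], weights: dict[str, int] = WEIGHTS) -> float:
    """Weighted average of per-criterion scores (here on a 1 to 5 scale)."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


# Placeholder scores gathered from testers, developers, and DevOps during the PoV.
tool_a = {"execution_speed": 4, "script_maintainability": 3, "ease_of_integration": 5, "roi": 2}
tool_b = {"execution_speed": 3, "script_maintainability": 5, "ease_of_integration": 4, "roi": 4}

for name, scores in (("Tool A", tool_a), ("Tool B", tool_b)):
    print(f"{name}: {weighted_score(scores):.2f}")
```

Agreeing on the weights before the trial starts keeps the evaluation honest, because the criteria cannot be bent afterward to favor whichever tool made the best first impression.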

Takeaway: A well-executed PoV goes beyond surface-level testing. It reveals hidden limitations, ensures compatibility with your ecosystem, and provides clarity before financial commitment. Rushing this phase can result in an automation tool that creates more problems than it solves.

Conclusion

Choosing the right test automation tool is not just about selecting a feature-rich platform; it’s about ensuring long-term efficiency, scalability, and seamless integration into your development workflow. A tool that aligns with your testing requirements, tech stack, and business goals will streamline processes, reduce maintenance overhead, and ultimately drive higher software quality.

Use this checklist as a strategic guide to evaluate your options, ensuring the tool you select enhances team productivity, minimizes risk, and delivers measurable ROI. The best way to validate a tool’s capabilities? Put it to the test.

Want to see how a modern test automation tool fits into your workflow? Try Test Evolve’s 30-day trial and assess its impact on execution speed, debugging efficiency, and reporting quality firsthand.